Keywords [MobileNetV3] [Hardware-aware NAS] [NetAdapt]

Howard A, Sandler M, Chu G, et al. Searching for mobilenetv3[J]. arXiv preprint arXiv:1905.02244, 2019.

1. Overview

This paper proposes MobileNetV3 (Large and Small), built from the following components:

1) Hardware-aware NAS for block-wise search

2) NetAdapt for layer-wise search (number of filters)

3) Hard-swish nonlinearity

4) New efficient network design

5) New efficient segmentation decoder: Lite Reduced Atrous Spatial Pyramid Pooling (LR-ASPP)

2. Network Search

2.1. Hardware-aware NAS for Block-wise Search

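The block-wise search uses the MnasNet-style multi-objective reward $ACC(m) \times [LAT(m)/TAR]^w$, where, for each model $m$:

- $ACC(m)$ is the accuracy

- $LAT(m)$ is the latency

- $TAR$ is the target latency and $w$ is the weight factor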

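1) For small models, accuracy changes much more dramatically with latency, so the weight factor is changed from $w = -0.07$ (the original MnasNet value) to $w = -0.15$ to compensate for the larger accuracy change at different latencies.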

2) After the initial seed model is found with this reward, NetAdapt and other optimizations are applied to obtain the final MobileNetV3-Small model.
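A minimal sketch of the reward in Python, assuming latency is measured in milliseconds. The function name `mnas_reward` and the sample numbers are hypothetical, chosen only to show how the weight factor $w$ trades accuracy against latency.

```python
def mnas_reward(acc: float, lat_ms: float, target_ms: float, w: float) -> float:
    """Multi-objective reward ACC(m) * [LAT(m) / TAR]^w (hypothetical helper)."""
    return acc * (lat_ms / target_ms) ** w


if __name__ == "__main__":
    # Hypothetical model: 70% accuracy, 10% slower than a 10 ms target.
    for w in (-0.07, -0.15):
        print(f"w={w}: reward={mnas_reward(0.70, 11.0, 10.0, w):.4f}")
```

With $w = -0.15$, doubling latency must be matched by a relative accuracy gain of about $2^{0.15} \approx 1.11$ (11%) to keep the same reward, versus $2^{0.07} \approx 1.05$ (5%) with $w = -0.07$, so the larger exponent makes the search more sensitive to latency.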

2.2. NetAdapt for Layer-wise Search

NetAdapt uses two types of proposals:

- Reduce the size of any expansion layer.

- Reduce the bottleneck in all blocks that share the same bottleneck size, so that the residual connections are maintained.

In NetAdapt, each proposal is fine-tuned for $T = 10000$ steps, and each step must reduce latency by at least $\delta = 0.01|L|$, where $|L|$ is the latency of the seed model.
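A minimal sketch of one NetAdapt selection step in Python. The `Proposal` type, the numbers, and the selection rule (keep only proposals that cut latency by at least $\delta$, then pick the one with the largest $\Delta Acc / |\Delta latency|$) are assumptions for illustration; fine-tuning each proposal for $T$ steps and measuring its latency are stubbed out as precomputed fields.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Proposal:
    name: str          # hypothetical label, e.g. "shrink expansion layer in block 5"
    latency_ms: float  # measured latency of the modified network
    accuracy: float    # accuracy after a short fine-tune (T steps, stubbed here)


def select_proposal(cur_latency: float, cur_accuracy: float,
                    proposals: Sequence[Proposal], delta: float) -> Optional[Proposal]:
    """Pick the proposal with the best accuracy change per ms of latency saved.

    Proposals that do not reduce latency by at least `delta` are ignored.
    Returns None if no proposal qualifies.
    """
    best: Optional[Proposal] = None
    best_score = float("-inf")
    for p in proposals:
        saved = cur_latency - p.latency_ms
        if saved < delta:  # latency constraint not met
            continue
        score = (p.accuracy - cur_accuracy) / saved  # usually negative: least loss per ms wins
        if score > best_score:
            best, best_score = p, score
    return best


if __name__ == "__main__":
    seed_latency = 20.0             # hypothetical seed-model latency |L| in ms
    delta = 0.01 * seed_latency     # delta = 0.01|L|
    candidates = [
        Proposal("A", latency_ms=19.9, accuracy=0.749),  # saves only 0.1 ms < delta
        Proposal("B", latency_ms=19.5, accuracy=0.745),
        Proposal("C", latency_ms=19.0, accuracy=0.742),
    ]
    chosen = select_proposal(seed_latency, 0.750, candidates, delta)
    print(chosen.name if chosen else "no proposal")  # prints C
```

In NetAdapt this step is repeated, starting each time from the chosen proposal, until the target latency is reached.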

3. Network Improvements

3.1. Redesign Expensive Layers

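1) Redesign the last few layers: the final 1x1 expansion convolution is moved past the average pooling so it runs on a 1x1 instead of a 7x7 feature map, and the now-unneeded projection and depthwise layers of the preceding bottleneck are removed. This cuts 7 ms of latency with almost no loss of accuracy.

2) Reduce the number of filters in the first layer from 32 to 16 (using hard-swish) while maintaining the same accuracy, which saves 2 ms.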

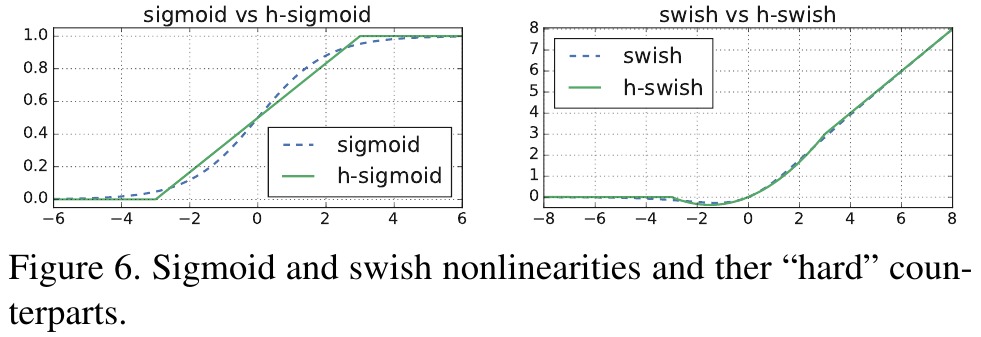
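A back-of-the-envelope check of why moving a 1x1 convolution past the average pooling is cheap: it costs $H \times W \times C_{in} \times C_{out}$ multiply-adds, so running it at 1x1 instead of 7x7 divides its cost by 49. The channel sizes (960 to 1280) are borrowed from the MobileNetV3-Large final stage for illustration only; this is not the paper's full accounting of the 7 ms saving.

```python
def conv1x1_madds(h: int, w: int, c_in: int, c_out: int) -> int:
    """Multiply-adds of a 1x1 convolution on an h x w feature map (no bias)."""
    return h * w * c_in * c_out


if __name__ == "__main__":
    before = conv1x1_madds(7, 7, 960, 1280)  # 1x1 conv applied before 7x7 average pooling
    after = conv1x1_madds(1, 1, 960, 1280)   # same conv applied after pooling
    print(f"{before:,} vs {after:,} MAdds ({before // after}x fewer)")
    # prints "60,211,200 vs 1,228,800 MAdds (49x fewer)"
```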

3.2. Nonlinearities

- $\mathrm{swish}(x) = x \cdot \sigma(x)$

- $\text{h-swish}(x) = x \cdot \frac{\mathrm{ReLU6}(x+3)}{6}$

1) Because the sigmoid is much more expensive to compute on mobile devices, it is replaced with its piece-wise linear hard analog $\frac{\mathrm{ReLU6}(x+3)}{6}$.

2) Most of the benefits of swish are realized by using it only in the deeper layers, so h-swish is only used in the second half of the model.
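A minimal scalar sketch of both nonlinearities in plain Python (function names are illustrative). It shows that h-swish replaces the sigmoid with the piece-wise linear $\mathrm{ReLU6}(x+3)/6$, is exactly 0 for $x \le -3$ and exactly $x$ for $x \ge 3$, and stays close to swish in between.

```python
import math


def relu6(x: float) -> float:
    return min(max(x, 0.0), 6.0)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float) -> float:
    return x * sigmoid(x)            # needs an exponential per element


def hard_sigmoid(x: float) -> float:
    return relu6(x + 3.0) / 6.0      # piece-wise linear stand-in for sigmoid


def hard_swish(x: float) -> float:
    return x * hard_sigmoid(x)       # only an add, clamps, and multiplies


if __name__ == "__main__":
    for x in (-4.0, -2.0, 0.0, 2.0, 4.0):
        print(f"x={x:+.1f}  swish={swish(x):+.4f}  h-swish={hard_swish(x):+.4f}")
```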

3.3. SE Block

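The squeeze-and-excite bottleneck is fixed to $1/4$ of the number of channels in the expansion layer for all SE blocks.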
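A minimal pure-Python sketch of a squeeze-and-excite block whose bottleneck is 1/4 of the expansion channels. The hard-sigmoid gate, the absence of biases, and the helper names are assumptions for illustration (hard-sigmoid gating is what common MobileNetV3 implementations use, but these notes do not state it).

```python
import random
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def hard_sigmoid(x: float) -> float:
    return min(max(x + 3.0, 0.0), 6.0) / 6.0


def se_block(feature: List[Vector], w_reduce: Matrix, w_expand: Matrix) -> List[Vector]:
    """feature: C channels, each a flattened list of H*W activations.

    w_reduce: (C // 4) x C, w_expand: C x (C // 4). Biases are omitted.
    """
    squeezed = [sum(ch) / len(ch) for ch in feature]               # global average pool -> C values
    hidden = [max(0.0, z) for z in matvec(w_reduce, squeezed)]     # FC down to C/4 + ReLU
    gates = [hard_sigmoid(z) for z in matvec(w_expand, hidden)]    # FC back to C + hard-sigmoid
    return [[g * a for a in ch] for g, ch in zip(gates, feature)]  # rescale each channel


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    channels, spatial = 16, 4 * 4  # hypothetical expansion width and 4x4 feature map
    squeeze = channels // 4        # SE bottleneck fixed to 1/4 of the expansion channels
    x = [[rng.random() for _ in range(spatial)] for _ in range(channels)]
    y = se_block(x, random_matrix(squeeze, channels, rng), random_matrix(channels, squeeze, rng))
    print(len(y), len(y[0]))       # prints "16 16": same shape as the input
```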

4. MobileNetV3

4.1. Block

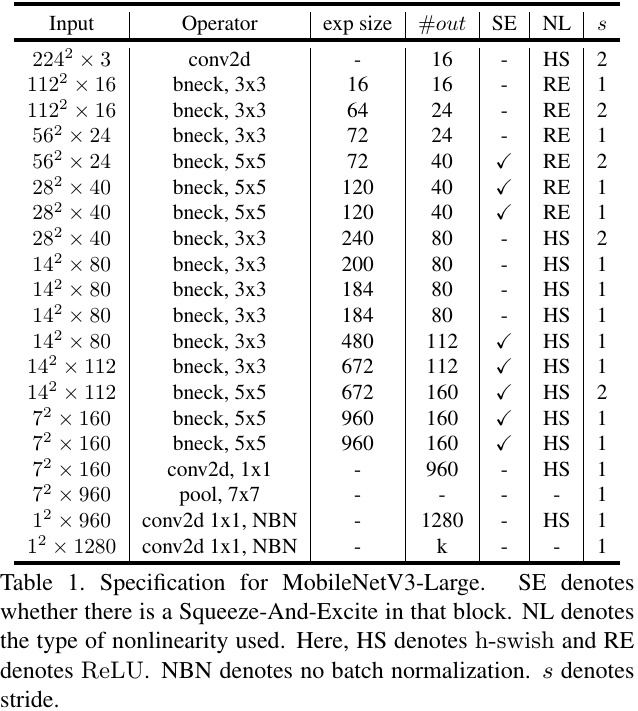

4.2. MobileNetV3-Large

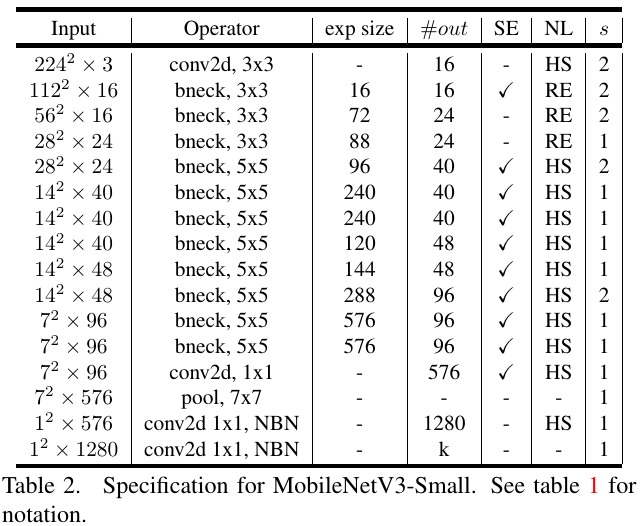

4.3. MobileNetV3-Small

4.4. LR-ASPP